- Leveraging Dedicated Hosting by Pebble Host

- Jonathan Haack

- Haack’s Networking

- webmaster@haacksnetworking.org

//backupnode//

Latest Updates: https://wiki.haacksnetworking.org/doku.php?id=computing:backupnode

As I discussed in the last post, the sudden server and/or link failure that happened a month ago was a source of concern for me. After all, in addition to hosting my own infra and that of my clients there, I also volunteer and host FLOSS instances for the PubGLUG community. So, there are more than just monetary reasons to want high availability. There are many ways to minimize or practically remove downtime, but I wanted this solution to match my current setup at Brown Rice Data Center so that, in the case of failure, I could move files to the hot spare and spin up the same ecosystem on a different node. That ecosystem is built on qemu+virsh and details can be found here. I don’t want my first node and a hot spare at a different site to require the complexity and/or responsibilities of clustering the hardware (e.g., Kubernetes) and/or mirroring or replicating software internally (e.g., doveadm). Yes, I want both of those things eventually, but they are outside the scope of this project.

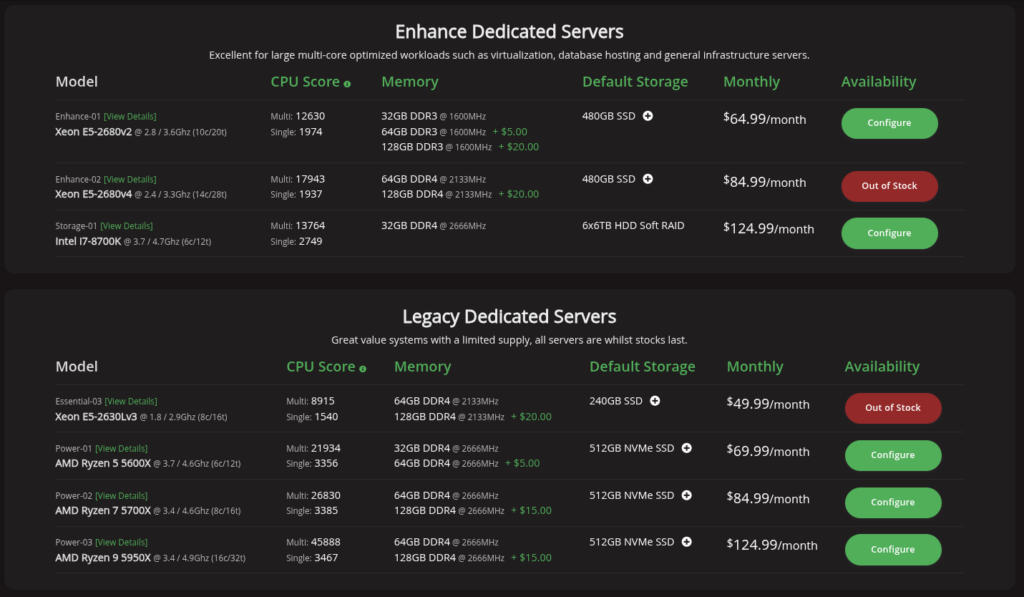

This meant that the first question was: who the heck actually offers real hosting hardware and a /28 or larger on which I can set up a virtualization stack? There are not many options where you can click and buy, and many dedicated hosting sites have “contact support for pricing” pages. Finding companies where you can say “I want a SuperMicro with XX amount of IPs and YY compute and ZZ ram, Gbps, storage … etc.” is rare. Out of the three sites that had “buy it now” hardware and tooling, the best was Pebble Host. They specialize in gaming solutions, but also offer dedicated hosting. My Taos box has dual 12-core processors, or 48 threads. By contrast, every Pebble box has a single processor. They do, however, offer single processors with as many as 36 threads, which is pretty decent. Ultimately, if I paid Pebble Host exactly what I pay Brown Rice, they would give me half to three quarters of the value on average. Originally, I was focused on matching my specs at Brown Rice as closely as possible, but as you can see below, it just could not happen. I have 376GB of RAM already and use most of it … that and lacking the second processor meant it was impossible to make a prince out of a frog.

However, they offer IP space and have upcoming IPv6 support. And maybe I was thinking about this wrong. So, after tinkering for a month and setting up a large Xeon at Pebble, including figuring out how their networking and bridging for virtual appliances worked … I decided to rethink this. Instead of trying to match the performance of my Brown Rice box, what if I purchased a newer box with less compute and used it as a bare-bones SOS backup for my primary business email server and/or possibly a private cloud or two for an upper-tier client, or other such boutique cases where I wanted quick, new hardware and no upkeep responsibility? In fact, I was already negotiating a business deal for the cloud and was getting sick of my mailserver going down any time I updated, etc. Instead of paying nearly $200 per month, I could reduce compute threads but boost compute performance (Ryzen, not Xeon), reduce IP space, improve and simplify storage (2TB NVMe boot), and cut costs in half … and get more value by using less. Not only did I have a current contract in the works that fit this need exactly … there were sure to be more in the near future. So, I ended up focusing on the Ryzens instead.

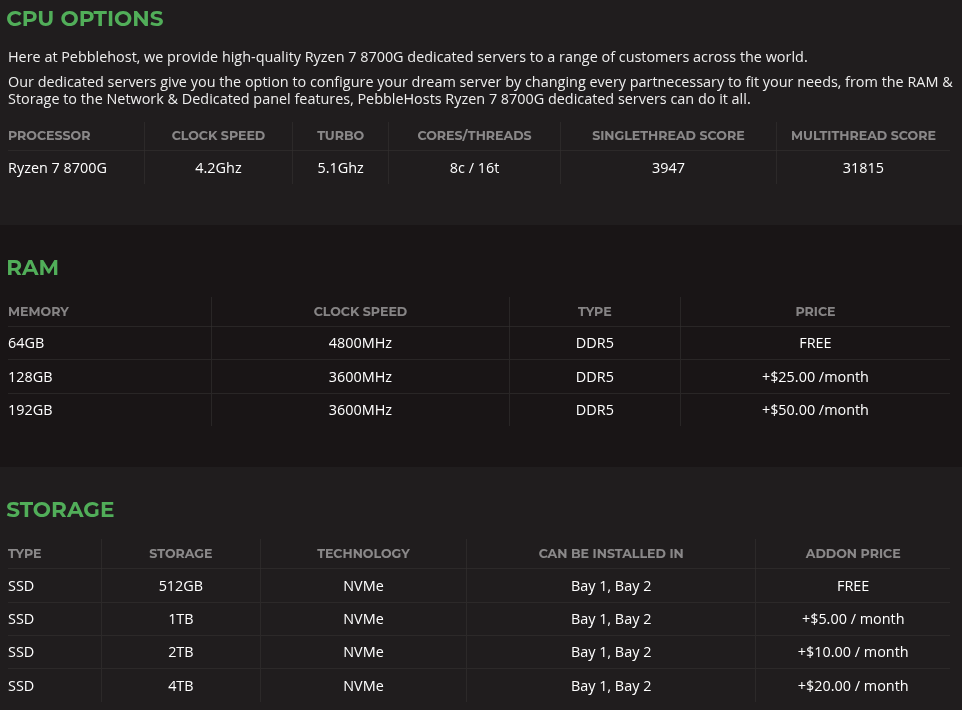

I decided to get the Performance 03 option, or Ryzen 8700 series, with 16 threads (plenty for 4 virtual appliances), 64GB of RAM, and a 2TB NVMe drive. Total cost at the time was $88 per month. This was now making sense; each box I get like this could service 4 clients and/or provide 4 nodes for business infra, etc. Lowering the total cost meant that the cost I pass on to the customer would be lower as well. At this point, nearly two months after starting with “how do I back up the Brown Rice box in case of failure,” I arrived at “let’s use smaller boxes for porting mission-critical services and/or for client services.” This is okay. It’s now time for setup. On the bare metal, it’s important to note that I use Debian standard utilities and nothing else; it’s roughly 650MB post install and includes nothing extra. After updating and upgrading, let’s get the bridge set up:

sudo apt install bridge-utils

sudo brctl addbr br0

sudo nano /etc/sysctl.d/99-sysctl.conf

<net.ipv4.ip_forward=1>

sudo nano /etc/default/ufw

DEFAULT_FORWARD_POLICY="ACCEPT"
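
Since both forwarding settings are edited by hand, it can help to confirm they actually landed in their files before moving on. The following is only a minimal sketch: `has_setting` and `report` are hypothetical helper names of my own choosing, not part of any package, and the check only recognizes a setting that sits alone on its line.

```shell
#!/bin/sh
# has_setting FILE KEY VALUE
# Succeeds if FILE contains an uncommented line equal to KEY=VALUE once
# whitespace and double quotes are stripped (hypothetical helper).
has_setting() {
    sed -e 's/[[:space:]]//g' -e 's/"//g' "$1" | grep -Fxq "$2=$3"
}

# report FILE KEY VALUE
# Prints a one-line status for the setting (hypothetical helper).
report() {
    if has_setting "$1" "$2" "$3"; then
        echo "ok: $2=$3 in $1"
    else
        echo "missing: $2=$3 in $1"
    fi
}
```

On the host, this could be called as `report /etc/sysctl.d/99-sysctl.conf net.ipv4.ip_forward 1` and `report /etc/default/ufw DEFAULT_FORWARD_POLICY ACCEPT`. If you would rather not reboot, `sudo sysctl --system` and `sudo ufw reload` should apply the two changes.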

Now that our bridge interface is created on the physical host and forwarding is enabled at the kernel and firewall level, we can set up our network interface for the host, which includes routing rules for the virtual appliances. I still prefer ifupdown over netplan, although I do know how to use netplan if required. Since my Debian installs were still ifupdown based, I configured /etc/network/interfaces as follows:

auto enp4s0

iface enp4s0 inet manual

auto br0

iface br0 inet static

address 44.13.19.10/26

gateway 44.13.19.1

nameservers 8.8.8.8

bridge-ports enp4s0

bridge-stp off

bridge-fd 0

bridge_hw enp4s0

up ip route add 44.13.19.11/32 dev br0

#up ip route add 44.13.19.12/32 dev br0

#and so on ...
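
Because every virtual appliance needs its own /32 route line in the stanza, the list grows with each guest. As a rough sketch, the lines can be generated rather than typed. `guest_routes` is a hypothetical helper name, and the second address simply mirrors the commented example above.

```shell
#!/bin/sh
# guest_routes BRIDGE IP...
# Prints one ifupdown "up" line per guest address, ready to paste into
# the bridge stanza in /etc/network/interfaces (hypothetical helper).
guest_routes() {
    bridge=$1
    shift
    for ip in "$@"; do
        printf 'up ip route add %s/32 dev %s\n' "$ip" "$bridge"
    done
}
```

For example, `guest_routes br0 44.13.19.11 44.13.19.12` prints the two route lines for the first two appliances. Once the bridge is up, `ip route show dev br0` should list the resulting host routes.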

There are two things here that I don’t have to do at Brown Rice. The first is bridge_hw enp4s0, which ensures that the bridge I created in Debian uses the MAC address of the primary NIC. This is required because Pebble Host only routes whitelisted MAC addresses; any MAC they have not approved won’t route. The second is the up ip route add 44.13.19.11/32 dev br0 line, which is strictly required for the virtual appliance to be reachable and/or route. I don’t know why this is needed here and not at Brown Rice. I was able to piece this together by scouring their PVE videos and tutorials. Most were out of date, but after a week or so of that and discussions with their team, this recipe proved flawless. In addition to making the Debian bridge use the whitelisted MAC, you also need to generate a vMAC on their web panel for your virtual appliance’s IP address and then make sure the appliance uses only that MAC. For me, I simply copied the vMAC from their panel, ran virsh edit backup.server.qcow2, scrolled down to the network block, and replaced the MAC address with the approved one. After rebooting the host and starting the virtual appliance, I could confirm the appliance could route using the virsh console. There’s no special setup required inside the ifupdown stanza on the client’s side.

auto enp1s0

iface enp1s0 inet static

address 44.13.19.11

netmask 255.255.255.192

gateway 44.13.19.1

nameservers 8.8.8.8
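
Since an unapproved MAC simply won’t route, the vMAC step is worth double-checking before you boot the appliance. This is only a sketch under a few assumptions: `xml_has_mac` is a hypothetical helper name, the domain XML has been saved to a file first, and the MAC shown in the usage note is a placeholder rather than a real vMAC.

```shell
#!/bin/sh
# xml_has_mac XMLFILE MAC
# Succeeds if the saved libvirt domain XML contains a <mac address='...'/>
# element equal to MAC, compared case-insensitively (hypothetical helper).
xml_has_mac() {
    want=$(printf '%s' "$2" | tr 'A-F' 'a-f')
    tr 'A-F' 'a-f' < "$1" | grep -Eq "<mac address=['\"]$want['\"]"
}
```

A possible way to use it: run `virsh dumpxml backup.server.qcow2 > /tmp/domain.xml`, then `xml_has_mac /tmp/domain.xml 52:54:00:aa:bb:cc && echo "vMAC matches"`, substituting the vMAC from the panel for the placeholder.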

After that, I set up an appliance for a client and one for myself, linked both to the larger version control and backup system I have in place, and called it a day. It was a fun exercise, and I now have affordable dedicated hosting in place that provides a nice compromise between co-location and cloud computing.

Thanks, oemb1905